2 January

Why Hosting for Millions is Easier than Hosting for Thousands

Introduction

Web hosting in a cloud environment is a complex subject to explain. So why is it easier to host an application for millions of users than for thousands?

In the previous article, we looked at the more technical side of how our applications work. Today, we explain a few hosting-related topics clearly and show why our solutions are effective. By revealing these behind-the-scenes aspects of our work, we hope to give you a better understanding of what makes our applications successful.

In this part, we discuss containerization, reverse proxies, and infrastructure as code (IaC). Together, these explain how we manage the environment an application runs in.

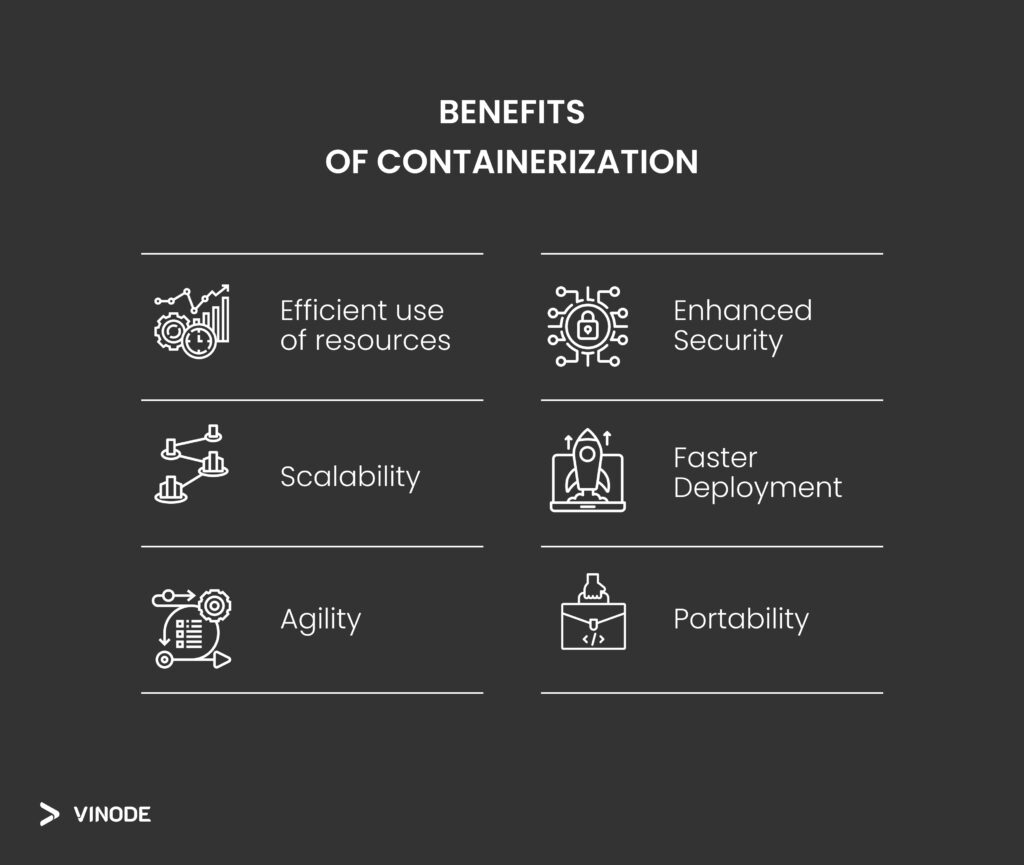

Containerization in a nutshell

Containerization, in simple terms, is packaging a single application into an isolated unit called a container, so that whoever gains access to it cannot change the underlying system. There are two common ways to achieve this kind of isolation: containers and virtual machines.

A virtual machine runs a complete copy of an operating system on top of a virtualization layer (a hypervisor), and it boots from scratch. If we imagine the system as a pyramid of interdependent layers, every layer of that pyramid must run inside the virtual machine for it to work at all.

A container, by contrast, isolates only the top layer of this pyramid, which is the lightest one. The operating system underneath is shared and forms the foundation; it stays protected because processes inside the container cannot reach the host's privileged functions. Because a container does not carry a whole operating system, it is much more efficient, and that efficiency translates directly into good performance. Everything a particular application needs can be packed into a container: data volumes, additional applications, virtual environments, programming language runtimes, system tools, libraries, and more. By encapsulating all of this, you can hand the container to anyone, and it will run on their machine regardless of its operating system, as long as a compatible container runtime is installed.

What is Docker

Docker is an open-source platform that automates the deployment, scaling, and management of applications. In simple terms, it is a container platform.

A container packaged with Docker can be launched on any single machine that has Docker installed. Running a container this way is efficient and convenient, which is why we prefer it: it lowers server costs and simplifies system administration.

How can we quickly scale

Cloud services may be inexpensive, but a single machine running a single container can only handle so much, for example about 1,000 concurrent users. When traffic spikes, say after a new post goes live or a particular service is promoted, the container becomes overloaded. It stops responding in time, so visitors either cannot reach the page at all or wait so long for it to load that most of them give up.

To manage a growing number of Docker containers efficiently, we need a tool called Kubernetes. When hosting for millions of people, Kubernetes is the best solution; its alternative is Docker Swarm, which we do not use. Kubernetes manages many containers across many machines at once, so we are no longer limited to a single machine. Between the internet and the containers sits a load balancer that distributes traffic among them. For example, if one application runs in three containers, it can handle roughly three times the traffic. We can also start additional machines and add them to Kubernetes, which then automatically deploys containers on them.
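A load balancer can use several strategies; the simplest is round-robin, where each new request goes to the next container in turn. The Python sketch below illustrates only that idea. The container names are hypothetical, the figure of about 1,000 users per container is the example from this article, and this is not how Kubernetes or any particular load balancer is implemented internally.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Send each incoming request to the next container in a fixed rotation."""

    def __init__(self, containers):
        if not containers:
            raise ValueError("at least one container is required")
        self.containers = list(containers)
        self._rotation = cycle(self.containers)

    def route(self):
        # Return the container that should handle the next request.
        return next(self._rotation)


def total_capacity(num_containers, users_per_container=1000):
    # Illustrative figure from the article: about 1,000 concurrent users per container.
    return num_containers * users_per_container


# Hypothetical container names, for illustration only.
balancer = RoundRobinBalancer(["container-1", "container-2", "container-3"])
assignments = [balancer.route() for _ in range(6)]
print(assignments)  # ['container-1', 'container-2', 'container-3', 'container-1', 'container-2', 'container-3']
print(total_capacity(3))  # 3000
```

Real load balancers also take health checks and current load into account, which this sketch leaves out.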

Next comes updating such a container. Because it is serving client traffic, updating it means stopping it and starting it again. With a single container, this causes downtime, and downtime costs customers and traffic. It might last a few seconds or a few minutes, either of which hurts the application. With three machines, however, we can take one offline, update it, and bring it back while the other two keep serving traffic, and then repeat the process for each remaining machine in turn. As a result, there is no downtime.
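The rolling update described above can be simulated in a few lines of Python. This is a hypothetical sketch: the machine names and version labels are made up, and it only tracks how many machines are serving at each step. In Kubernetes itself, Deployments provide this behavior through the RollingUpdate strategy, tuned with the maxUnavailable and maxSurge settings.

```python
def rolling_update(machines, new_version):
    """Update machines one at a time; return how many were serving during each update."""
    serving_during_update = []
    for machine in machines.values():
        # Take this machine out of the load balancer's rotation.
        machine["serving"] = False
        serving_during_update.append(sum(m["serving"] for m in machines.values()))
        # Install the new version, then put the machine back into rotation.
        machine["version"] = new_version
        machine["serving"] = True
    return serving_during_update


# Hypothetical three-machine cluster running version "v1".
cluster = {
    name: {"version": "v1", "serving": True}
    for name in ("machine-1", "machine-2", "machine-3")
}
counts = rolling_update(cluster, "v2")
print(counts)  # [2, 2, 2]: two machines keep serving while the third is updated
```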

We could package such a container for our customers to host themselves. However, because we want to keep the quality of our solution as high as possible, it is much easier for us to host it ourselves, which gives our customers peace of mind and the security that comes with managed hosting.

An example

Now suppose those same three machines serve ten customers, whose combined traffic can be considerably higher. Three machines for ten customers is already more cost-effective than one machine per customer, which would mean ten machines. In addition, if one of these customers suddenly gets more traffic, we can scale up quickly and easily, because we have spare computing power in reserve.
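The cost argument is simple arithmetic. The sketch below compares one dedicated machine per customer with a shared pool that keeps one spare machine as headroom. All numbers are assumptions for illustration: ten customers with about 200 concurrent users each, and machines that handle about 1,000 users, as in the earlier example.

```python
import math


def dedicated_machines(loads, capacity_per_machine):
    # Each customer gets at least one machine, more if their own load requires it.
    return sum(max(1, math.ceil(load / capacity_per_machine)) for load in loads)


def shared_machines(loads, capacity_per_machine, spare=1):
    # Pool all customers' load, then keep `spare` extra machines for sudden spikes.
    return math.ceil(sum(loads) / capacity_per_machine) + spare


# Hypothetical load: ten customers, about 200 concurrent users each.
loads = [200] * 10
print(dedicated_machines(loads, 1000))  # 10
print(shared_machines(loads, 1000))  # 3
```

The shared pool wins when most customers use only a fraction of a machine; the spare machine is what lets one customer's sudden spike be absorbed without waiting for new hardware.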

Reverse proxy

A reverse proxy is a tool that, among other things, protects the containers behind it from unauthorized access. It ensures that no one can reach a container other than in the intended way: for example, we allow access only through specific ports and domain names over HTTP(S). This way, the container cannot be reached over SSH (Secure Shell), which would allow someone to log on to the machine and modify its contents, as a developer would.

Speaking of reverse proxies, we use Nginx, open-source web server software that acts both as a reverse proxy and as a load balancer, which helps boost performance.

A domain such as google.com is resolved through DNS, and x.google.com is a subdomain of it. A reverse proxy can route requests to different destinations depending on the (sub)domain they were sent to, so a single reverse proxy can also act as a load balancer. This means we can serve client A at A.Vinode.io and client B at B.Vinode.io through the same proxy. We still have three machines, but each client gets its own address. The key is to configure the reverse proxy correctly so that it routes traffic based on the requested address.
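Routing by domain name can be sketched as a lookup table keyed by the requested host. The Python example below is hypothetical: the backend addresses are made up, only ports 80 and 443 are accepted to mirror the HTTP-only rule, and requests are spread round-robin within each client's pool. In practice, Nginx expresses the same idea declaratively in its configuration (server blocks matched by server_name that proxy to an upstream group) rather than in application code.

```python
from itertools import cycle

# Only plain HTTP and HTTPS are exposed; SSH (port 22) and everything else is refused.
ALLOWED_PORTS = {80, 443}

# Hypothetical routing table: hosts follow the article's example, addresses are made up.
ROUTES = {
    "a.vinode.io": ["10.0.0.11:8080", "10.0.0.12:8080"],
    "b.vinode.io": ["10.0.0.13:8080"],
}


class HostRouter:
    def __init__(self, routes):
        # One round-robin rotation per host, so each client's traffic is balanced
        # across that client's own containers.
        self._pools = {host.lower(): cycle(backends) for host, backends in routes.items()}

    def route(self, host_header, port):
        """Return the backend for a request, or None if it must be rejected."""
        if port not in ALLOWED_PORTS:
            return None
        # Host names are case-insensitive; strip an optional ":port" suffix.
        host = host_header.split(":", 1)[0].lower()
        pool = self._pools.get(host)
        return next(pool) if pool is not None else None


router = HostRouter(ROUTES)
print(router.route("A.Vinode.io", 443))  # 10.0.0.11:8080
print(router.route("a.vinode.io", 443))  # 10.0.0.12:8080
print(router.route("B.Vinode.io", 80))  # 10.0.0.13:8080
print(router.route("B.Vinode.io", 22))  # None: SSH is not exposed
print(router.route("unknown.example", 443))  # None
```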

Infrastructure as code

The final reason hosting at scale is easier is infrastructure as code (IaC). We use Terraform, an open-source infrastructure-as-code tool.

To return to the previous example: we have three machines and do not know exactly what the traffic will be, but we want to be prepared for anything. If we expect a certain amount of traffic, we can do a so-called hot start: we prepare a machine in advance so that it is ready when the traffic arrives and can be routed to it. There is also a cold start: traffic comes in and we reach a threshold signaling that we are close to the limit (for example, only 30% of our server capacity remains), so we start a new server from scratch. Because this behavior is programmed in Terraform, once the threshold is reached it contacts our cloud provider and requests a new machine. Terraform configures the new machine, adds it to the set of machines, and the machine joins Kubernetes as a new node. It stays in operation for some time, and if more capacity is needed, further machines are added as required. As traffic keeps growing, we scale in response to demand.
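The threshold rule can be expressed as a small decision function. This sketch only computes how many machines to add; it does not call Terraform or any cloud provider API. The function names are hypothetical, the 30% free-capacity threshold is the article's example, and "warm spares" stand for machines prepared in advance by a hot start.

```python
def machines_needed(load, capacity_per_machine, min_free_pct=30):
    """Smallest machine count that keeps at least min_free_pct % of capacity free."""
    usable_pct = 100 - min_free_pct
    numerator = load * 100
    denominator = capacity_per_machine * usable_pct
    # Integer ceiling division, avoiding floating-point rounding surprises.
    return -(-numerator // denominator)


def plan_scale_up(load, running, warm_spares, capacity_per_machine, min_free_pct=30):
    """Return (hot_starts, cold_starts) needed to get back above the free-capacity threshold."""
    missing = max(0, machines_needed(load, capacity_per_machine, min_free_pct) - running)
    hot_starts = min(missing, warm_spares)  # already prepared: just route traffic to them
    cold_starts = missing - hot_starts  # must be requested from the cloud provider
    return hot_starts, cold_starts


# Three machines of about 1,000 users each, now carrying 2,200 users
# (73% used, so less than 30% is free).
print(plan_scale_up(2200, running=3, warm_spares=0, capacity_per_machine=1000))  # (0, 1)
print(plan_scale_up(2200, running=3, warm_spares=1, capacity_per_machine=1000))  # (1, 0)
print(plan_scale_up(2000, running=3, warm_spares=0, capacity_per_machine=1000))  # (0, 0)
```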

Terraform also lets us plan for predictable traffic peaks. For example, if we expect almost no traffic between hours x and y and the highest traffic at other times, we can specify that at least five containers must run during those busy hours.
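A time-based minimum can be sketched as a schedule that sets a floor under whatever load-based scaling decides. The article leaves the quiet hours as x to y, so the window used here (22:00 to 06:00) is purely hypothetical, as is keeping one container up during quiet hours; the minimum of five containers at busy times is the article's example.

```python
# Hypothetical quiet window; the article only calls these hours "x" and "y".
QUIET_START, QUIET_END = 22, 6
QUIET_MIN_CONTAINERS = 1  # assumption: keep one container running even when traffic is low
PEAK_MIN_CONTAINERS = 5  # the article's example minimum for busy hours


def in_quiet_window(hour, start=QUIET_START, end=QUIET_END):
    # Handles windows that wrap past midnight, such as 22:00 to 06:00.
    if start <= end:
        return start <= hour < end
    return hour >= start or hour < end


def min_containers(hour):
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    return QUIET_MIN_CONTAINERS if in_quiet_window(hour) else PEAK_MIN_CONTAINERS


def desired_containers(hour, load_based_count):
    # The schedule is only a floor: load-based scaling may still ask for more.
    return max(min_containers(hour), load_based_count)


print(desired_containers(3, 0))  # 1
print(desired_containers(12, 2))  # 5
print(desired_containers(12, 8))  # 8
```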

Conclusions

We have gone through several interlocking aspects of hosting. Proper hosting requires dedicated infrastructure whose components build on one another.

When you build a 3D web application for your real estate business with us, you can rest assured that hosting on our side is carefully looked after, because it plays a critical role in the success of such applications.

You do not have to worry about server management or technical matters such as auto-scaling. With Terraform, we can scale up or down depending on whether the traffic is there, so we are prepared for any eventuality, and you do not need an entire IT department to manage this infrastructure.

Contact us to discuss opportunities for cooperation, and check out our other articles to get familiar with what we do and how!
